When you press a button on your iPhone, you expect it to click: not just the sound, but that little pushback under your thumb. That feeling isn’t just a convenience. It’s communication. For people who can’t rely on sight, it’s often the only way to know whether an action worked. Apple’s reported move toward solid-state haptic buttons isn’t only about making devices sleeker. It’s about building interfaces that speak to everyone, even people who can’t see them.

Why Haptics Matter More Than You Think

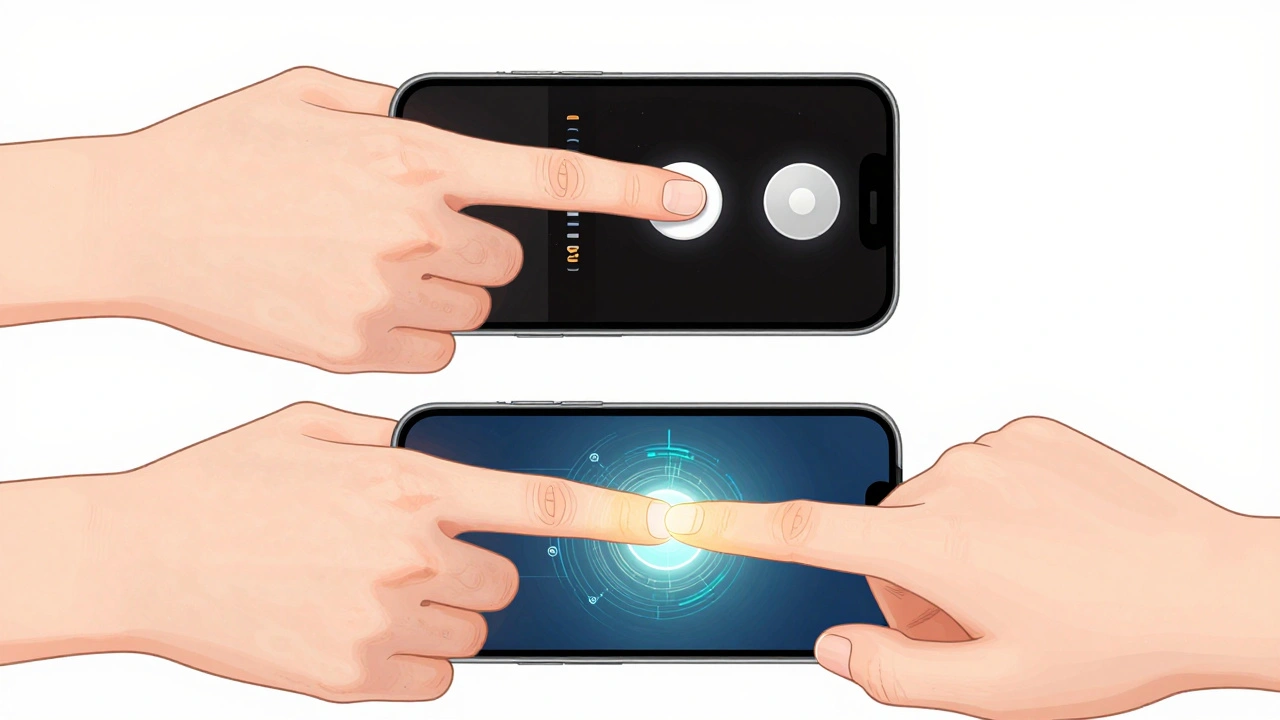

Most people think haptics are just fancy vibrations. But consider this: when you mute your phone in a meeting, you don’t look at the screen. You feel the button. When you adjust the volume with the phone in your pocket, you don’t see the icons. You feel the resistance. For users with low vision or no vision, these tactile cues aren’t optional; they’re essential. Apple’s shift away from physical buttons isn’t about removing something. It’s about replacing it with something better: consistent, programmable, reliable feedback that works whether you’re blind, standing in bright sunlight, or wearing gloves.

The Tech Behind the Feeling

Apple isn’t using old-school vibration motors. According to reports, the new system relies on two technologies: piezoelectric actuators and voice coil motors (VCMs).

Piezoelectric actuators deliver ultra-fast, crisp feedback, like the snap of a mechanical switch. They’re small enough to fit under each button and can be tuned to simulate different pressures. A light press might feel like a soft tap; a firm press, like a sharp, distinct click. This level of control lets users tell actions apart without looking.

Voice coil motors, on the other hand, are simpler and cheaper. They work like tiny speakers, moving a mass back and forth along one axis to create a strong, clear pulse. Think of them as the workhorses of haptics: good enough for volume buttons, but not quite as precise as piezoelectric actuators.

Apple is expected to use both, depending on placement: piezoelectric actuators for the camera button and VCMs for the power button. Cost plays a part, but the bigger goal is matching the right feedback to the right task.

How Apple Is Rolling It Out

This isn’t happening overnight. Apple has been working on it for years. The original effort, code-named "Project Bongo," was reportedly dropped from the iPhone 15 Pro in 2023. Early prototypes felt too artificial: like pressing a flat piece of metal, not a button. So Apple went back to the lab.

Current reports point to a staged rollout. In 2026, with the iPhone 18, Apple would start small: removing the capacitive layer from the Camera Control button while keeping its pressure sensitivity. Users could still press halfway to focus, just as on a DSLR shutter button, without any physical movement. Then, in 2027, with the model expected to be called the iPhone 20, all buttons would switch to solid-state haptics, including the power button, volume buttons, and Action button. The same approach could eventually reach the side controls on the Apple Watch.

Why wait? Because if the feedback doesn’t feel real, people will reject it. Apple has already proven the idea on the Mac: when it replaced the MacBook’s mechanical trackpad with the Force Touch trackpad in 2015, the Taptic Engine simulated a click convincingly enough that many users couldn’t tell the glass wasn’t moving. That’s the standard Apple is holding itself to now.
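The half-press-to-focus behavior can be pictured as a small state machine driven by a stream of force readings. This is a minimal sketch, not Apple’s implementation: the normalized 0.0 to 1.0 force scale, the two thresholds (0.3 for focus, 0.7 for capture), and the 0.05 hysteresis margin are hypothetical values chosen for illustration.

```python
from enum import Enum


class Stage(Enum):
    IDLE = "idle"
    FOCUS = "focus"      # half press: lock focus
    CAPTURE = "capture"  # full press: take the photo


# Hypothetical thresholds on a normalized 0.0-1.0 force scale.
FOCUS_THRESHOLD = 0.3
CAPTURE_THRESHOLD = 0.7
# Hysteresis: force must drop this far below a threshold before the stage is
# released, so a thumb hovering near a threshold doesn't cause repeated clicks.
HYSTERESIS = 0.05


def next_stage(current: Stage, force: float) -> Stage:
    """Return the stage for one force sample, given the current stage."""
    if force >= CAPTURE_THRESHOLD:
        return Stage.CAPTURE
    if current is Stage.CAPTURE and force >= CAPTURE_THRESHOLD - HYSTERESIS:
        return Stage.CAPTURE
    if force >= FOCUS_THRESHOLD:
        return Stage.FOCUS
    if current is not Stage.IDLE and force >= FOCUS_THRESHOLD - HYSTERESIS:
        return Stage.FOCUS
    return Stage.IDLE


def process(samples: list[float]) -> list[Stage]:
    """Return the stages entered, in order, over a stream of force samples.

    Each transition is a point where the device could play feedback, e.g. a
    light tap when entering FOCUS from IDLE and a firm click on entering CAPTURE.
    """
    stage = Stage.IDLE
    entered = []
    for force in samples:
        new_stage = next_stage(stage, force)
        if new_stage is not stage:
            entered.append(new_stage)
            stage = new_stage
    return entered
```

For example, `process([0.1, 0.35, 0.28, 0.72, 0.68, 0.5, 0.1])` returns `[Stage.FOCUS, Stage.CAPTURE, Stage.FOCUS, Stage.IDLE]`: the dip to 0.28 and the wobble at 0.68 are absorbed by the hysteresis instead of firing extra clicks.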

It’s Not Just Phones

Apple’s vision goes beyond the iPhone. The same technology could appear on iPads, for example as pressure-sensitive edges whose function changes with the app you’re using. On the Apple Watch, buttons could become context-aware: a single edge control could act as a shutter button in the Camera app, a play/pause button in Music, or a shortcut to a favorite contact in Messages. Instead of physical buttons cluttering the side, there would be a smooth surface that responds to what you need. Future Apple devices, like smart glasses or hearing aids, could use it too. Imagine AR glasses where a subtle tap on the frame silences notifications, or a hearing aid that vibrates gently when someone says your name. Haptics become the silent language of interaction.

Why This Is Inclusive Design

Inclusive design isn’t about bolting on features for disabled users. It’s about designing for everyone from the start, and solid-state haptics do exactly that:

- People with low vision rely on tactile feedback to navigate. A button that pulses differently for each function gives them clear, immediate cues.
- People with motor impairments benefit from consistent resistance, with no worn-out buttons that stick or fail to register.
- People in bright light or dark rooms, or with wet hands, don’t need to see the screen. They feel it.
- Sighted users benefit too. Some studies have found that clear haptic feedback helps users complete tasks faster than visual-only confirmation.

Apple’s move isn’t innovation for innovation’s sake. It’s about removing barriers. Every button replaced with haptics is a step toward a world where technology doesn’t require sight to work.
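A button that vibrates differently for each function implies a small "vocabulary" of pulse patterns that users must never confuse. The sketch below is hypothetical throughout: the function names, pulse values, and the distinguishability rule (different pulse count, or a peak-intensity gap of at least 0.3, or a total-duration gap of at least 10 ms) are illustrative assumptions, not Apple’s design or a validated perceptual model.

```python
from itertools import combinations
from typing import NamedTuple


class Pulse(NamedTuple):
    duration_ms: int   # how long the actuator fires
    intensity: float   # 0.0 (off) to 1.0 (maximum)


# Hypothetical haptic vocabulary: one pattern per function.
PATTERNS = {
    "photo_taken": (Pulse(10, 1.0),),
    "volume_step": (Pulse(8, 0.4),),
    "call_ended": (Pulse(15, 0.8), Pulse(15, 0.8)),
    "silent_mode": (Pulse(30, 0.6), Pulse(10, 1.0), Pulse(10, 1.0)),
}


def distinguishable(a, b, min_intensity_gap=0.3, min_duration_gap_ms=10):
    """Crude heuristic: two patterns can be told apart if they differ in pulse
    count, or in peak intensity or total duration by a noticeable margin."""
    if len(a) != len(b):
        return True
    peak_gap = abs(max(p.intensity for p in a) - max(p.intensity for p in b))
    duration_gap = abs(sum(p.duration_ms for p in a) - sum(p.duration_ms for p in b))
    return peak_gap >= min_intensity_gap or duration_gap >= min_duration_gap_ms


def confusable_pairs(patterns):
    """Return every pair of function names whose patterns are too similar."""
    return [
        (name_a, name_b)
        for (name_a, pat_a), (name_b, pat_b) in combinations(patterns.items(), 2)
        if not distinguishable(pat_a, pat_b)
    ]
```

Running `confusable_pairs(PATTERNS)` returns an empty list, meaning no two functions in this sample vocabulary share a near-identical pulse. A check like this is a design-time lint; real distinguishability would have to be confirmed with users.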

What This Means for the Future

Some market forecasts put the global haptics market at around $10.5 billion by 2030. Apple isn’t just riding that wave; it’s helping steer it. By making haptics central to every device, Apple sets a standard that other companies are likely to follow, and when they do, the whole industry will start thinking differently about interaction. Imagine a smart fridge that vibrates when your milk is running low, a car dashboard that taps your arm when a lane change is unsafe, or a wearable that pulses to remind you to breathe. These aren’t sci-fi dreams. They’re plausible next steps, and Apple’s haptic system could serve as the blueprint.

What’s Still Unresolved

Challenges remain. Can haptics really replace the satisfying click of a physical button? Can they last five years without wearing out? Can they be calibrated for every hand size and pressure sensitivity? Apple’s track record suggests it won’t ship this until it’s right: the feature has reportedly been shelved twice. That’s not indecision. That’s discipline. Apple would rather delay than disappoint. And that’s why, when it finally launches, it won’t feel like just a new feature. It’ll feel like the button you never knew you were missing.

Are Apple’s haptic buttons the same as vibration?

No. Ordinary vibration is broad and imprecise, like your phone buzzing in your pocket. Haptic feedback is precise and tightly timed. It’s designed to mimic the exact sensation of pressing a physical button (a sharp tap, a firm click, or a soft resistance) depending on what you’re doing. It’s not just shaking. It’s speaking.
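The difference between a click and a buzz shows up clearly in the signal that drives the actuator. This sketch generates two hypothetical waveforms: a "click" modeled as an exponentially damped sine burst that dies away within a few milliseconds, and a constant-amplitude notification buzz. The sample rate, frequencies, and decay constant are illustrative assumptions, not the parameters of any real Apple actuator.

```python
import math

SAMPLE_RATE_HZ = 8000  # hypothetical drive-signal sample rate


def click(freq_hz=230.0, decay_ms=3.0, length_ms=20.0):
    """A transient click: a sine burst whose amplitude decays exponentially."""
    n = int(SAMPLE_RATE_HZ * length_ms / 1000)
    tau = decay_ms / 1000  # decay time constant in seconds
    return [
        math.exp(-(i / SAMPLE_RATE_HZ) / tau)
        * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE_HZ)
        for i in range(n)
    ]


def buzz(freq_hz=170.0, length_ms=400.0):
    """A plain buzz: constant amplitude for the whole duration."""
    n = int(SAMPLE_RATE_HZ * length_ms / 1000)
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE_HZ) for i in range(n)]


def active_ms(signal, threshold=0.1):
    """Milliseconds from the start until the last sample above the threshold."""
    last = max((i for i, s in enumerate(signal) if abs(s) > threshold), default=-1)
    return (last + 1) * 1000 / SAMPLE_RATE_HZ
```

With these parameters, `active_ms(click())` is about 7 ms while `active_ms(buzz())` is the full 400 ms. The short, sharply decaying burst is what reads as a single crisp event under the finger rather than as shaking.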

Will this help blind users?

Yes, and that’s the point. Blind and low-vision users rely on tactile feedback to confirm actions. With haptic buttons, they’ll know when a photo was taken, when the volume changed, or when a call ended, without needing to hear a sound or see the screen. The goal is for each button to have a unique, recognizable pulse, making navigation intuitive and independent.

Why did Apple cancel this twice?

Because the feedback didn’t feel real. Early versions reportedly felt like pressing flat metal: no resistance, no snap, no confirmation, and testers found them confusing. Apple’s bar is high: if it doesn’t feel like a button, it won’t ship. The company has spent years refining the actuators, tuning the pressure curves, and testing with real users. The aim is for the next version to do more than work; it should feel natural.
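"Tuning the pressure curves" and calibrating them for different hands can be pictured as a mapping from measured force to feedback strength. This is a minimal sketch under stated assumptions: the control points, the normalized force scale, and the median-of-firm-presses calibration rule are hypothetical choices for illustration, not Apple’s published method.

```python
from bisect import bisect_right
from statistics import median

# Hypothetical pressure curve as (normalized force, haptic intensity) control
# points: light presses get a soft response, and past ~0.6 the intensity ramps
# steeply toward a sharp click.
CURVE = [(0.0, 0.0), (0.2, 0.15), (0.6, 0.35), (0.8, 0.9), (1.0, 1.0)]


def intensity_for(force: float) -> float:
    """Linearly interpolate haptic intensity for a normalized force."""
    force = min(max(force, 0.0), 1.0)  # clamp into the curve's domain
    xs = [x for x, _ in CURVE]
    i = bisect_right(xs, force)
    if i >= len(CURVE):  # force == 1.0, the last control point
        return CURVE[-1][1]
    (x0, y0), (x1, y1) = CURVE[i - 1], CURVE[i]
    return y0 + (y1 - y0) * (force - x0) / (x1 - x0)


def calibrate(firm_press_peaks_newtons: list[float]):
    """Build a per-user mapping from raw force (newtons) to intensity.

    The median of several deliberate firm presses becomes normalized force 1.0,
    so a lighter-handed user reaches the same feedback with less force.
    """
    full_scale = median(firm_press_peaks_newtons)

    def user_intensity(newtons: float) -> float:
        return intensity_for(newtons / full_scale)

    return user_intensity
```

With this scheme, a user whose firm presses peak around 2.5 N and one whose presses peak around 5 N both get the same intensity at half of their own full-scale force. Using the median rather than the maximum keeps one unusually hard press from skewing the calibration.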

Can this work on other devices like laptops or cars?

Yes. Apple’s haptic technology has shipped in MacBook trackpads since 2015 in the form of the Taptic Engine. The same principles apply to car dashboards, smart home panels, and medical devices: any surface, whether glass, metal, or plastic, can become interactive with the right sensors and actuators. This isn’t just an iPhone thing. It’s a likely future for how all kinds of devices respond to touch.

Is this just for Apple products?

No. But Apple’s implementation is likely to set the standard. Companies like Microsoft and Samsung, as well as automakers, are probably watching closely, and when Apple ships this at scale, others will follow. Piezoelectric actuators and voice coil motors aren’t exclusive to Apple, and its patent filings are public. What Apple can prove is that haptic buttons can be reliable, precise, and user-friendly. Once that’s shown, the rest of the industry will adopt them.
